resources: frame-context collapse and OpenAI’s on alignment research (before all the safety disband)

The act of aligning oneself with a particular group or ideology. This can be done for a variety of reasons, including:

- To gain social acceptance

- To gain power

- To gain resources

thoughts

The real challenge isn’t preventing some hypothetical super-intelligence takeover, rather figuring out how to make AI systems that genuinely enhance human capability while remaining accountable to human values. I’m optimistic about this because it’s fundamentally an engineering problem, not an existential one.

Often known as a solution to solve “hallucination” in large language models token-generation. 1

To align a model is simply teaching it to generate tokens that is within the bound of the Overton Window.

The goal is to build a aligned system that help us solve other alignment problems

Should we build a ethical aligned systems, or morally aligned systems?

One of mechanistic interpretability’s goal is to ablate harmful features.

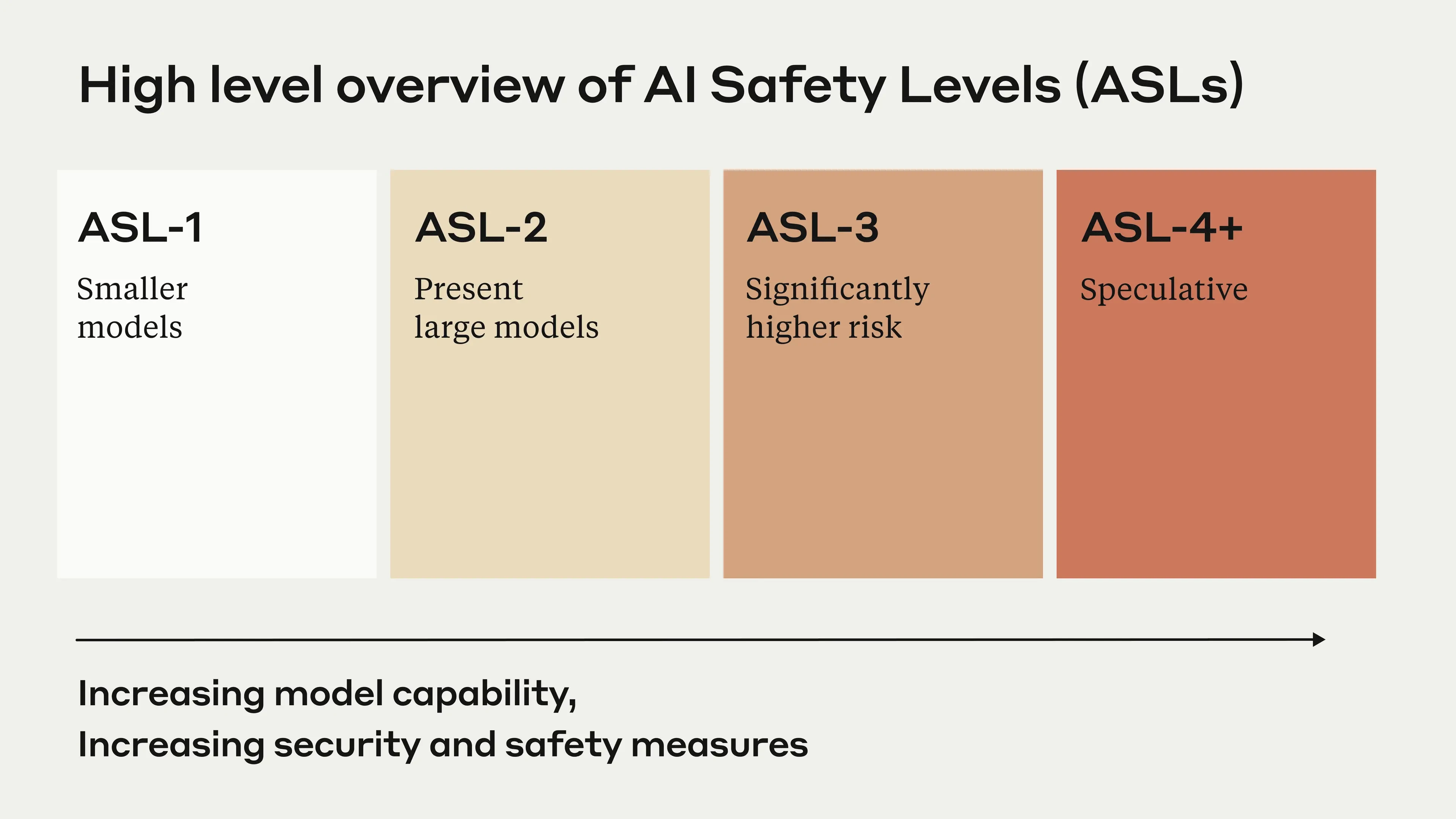

RSP

published by Anthropic

The idea is to create a standard for risk mitigation strategy when AI system advances. Essentially create a scale to judge “how capable a system can cause harm”